MIDI visualization, a case study

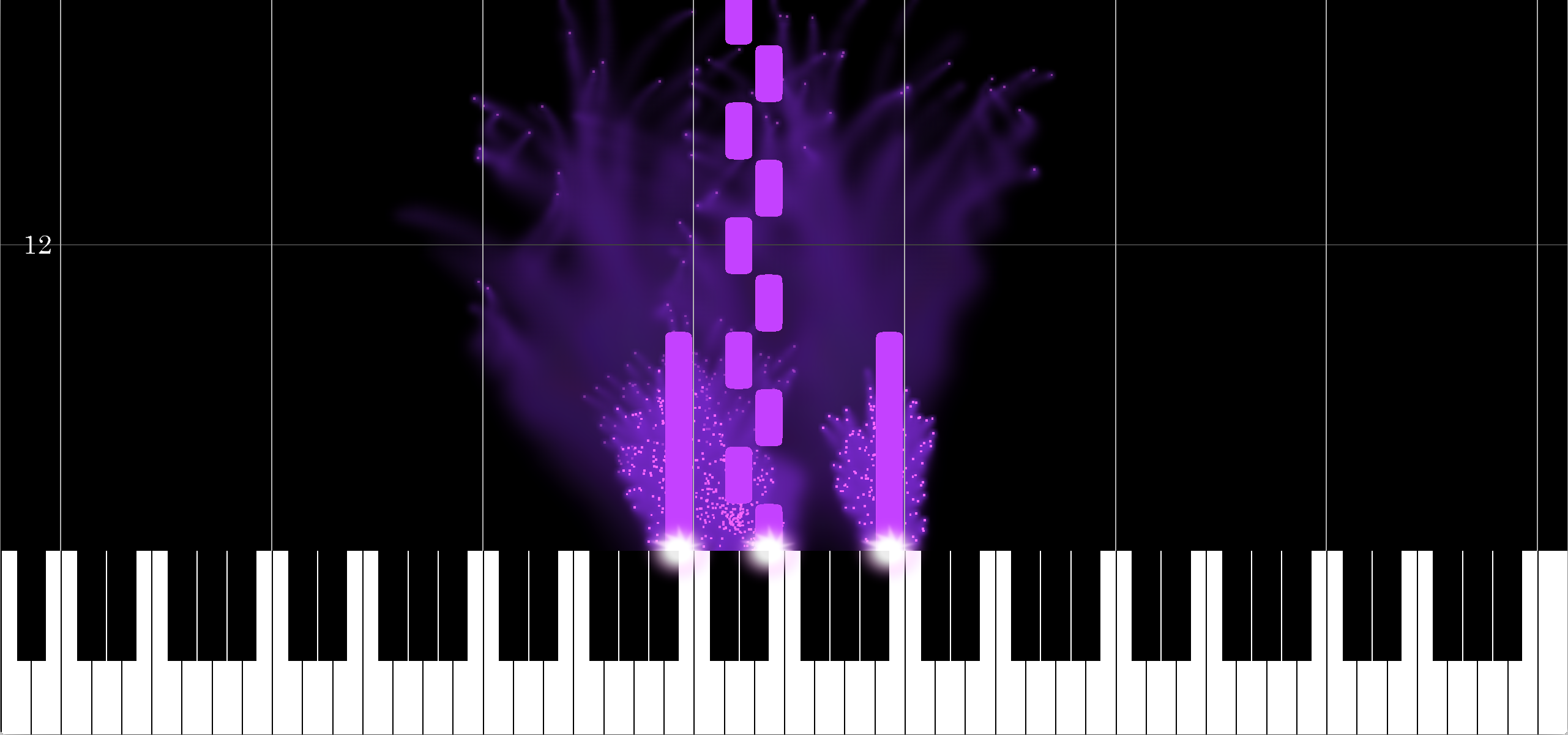

Recently, inspired by my partner starting to practice piano again and showing me this video, I challenged myself to build a small MIDI visualizer. I wanted to be able to display a MIDI track as an animated score, with moving notes, similar to the one in the video. The main development steps were:

- loading a MIDI file and parsing the notes it contains,

- displaying a scrolling score with these notes,

- adding visual effects to embellish the visualization.

MIDI processing

At the beginning of the project, I decided to implement my own MIDI parser rather than rely on one of the many available libraries. This helped me get a better understanding of the MIDI specification, and turned out to be viable as I only needed to parse notes and a small subset of the additional information contained in the files.

MIDI was designed at its core as a communication protocol, to allow digital music instruments to communicate and sync with each other, and to integrate into the music recording pipeline (mixing desks, computers, ...). Thus, a MIDI sequence is a stream of packets, each representing an event: a change in tempo, the connection/disconnection of an instrument, the beginning/end of a note (note on and note off events), and so on.

A MIDI file simply defines a group of tracks, each containing a MIDI stream of time-stamped packets, along with a few metadata blocks. The specification is not complex per se, but it defines many types of events and special cases[1].

There is nothing special or brilliant about the parser I implemented: the file is read as a byte stream, and all events are extracted along with the metadata into a series of C++ structures. The parsing is straightforward. The only gotchas I had to face were that, to save space, multiple consecutive events of the same type can be concatenated under certain conditions (the MIDI specification calls this running status)[2], and that some instruments denote the end of a note with a note on event with a null velocity.
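To make the two gotchas concrete, here is a minimal sketch of reading one channel event. It is not the parser from this project: `ChannelEvent` and `readChannelEvent` are hypothetical names, and delta times, meta events and SysEx messages are deliberately left out.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical, simplified event record (not the project's actual structure).
struct ChannelEvent {
    std::uint8_t status;  // high nibble: event type, low nibble: channel
    std::uint8_t data1;   // note number for note on/off
    std::uint8_t data2;   // velocity for note on/off

    bool isNoteOn() const { return (status & 0xF0) == 0x90 && data2 > 0; }
    // A note on with a null velocity is treated as a note off.
    bool isNoteOff() const {
        return (status & 0xF0) == 0x80 || ((status & 0xF0) == 0x90 && data2 == 0);
    }
};

// Reads one channel event starting at bytes[pos]. On success, pos is advanced
// and runningStatus is updated; on failure both are left untouched.
// If the first byte has its high bit clear, it is a data byte and the
// previous status (runningStatus) is reused.
bool readChannelEvent(const std::vector<std::uint8_t>& bytes, std::size_t& pos,
                      std::uint8_t& runningStatus, ChannelEvent& out) {
    std::size_t p = pos;
    if (p >= bytes.size()) return false;
    std::uint8_t status = runningStatus;
    if (bytes[p] & 0x80) status = bytes[p++];  // explicit status byte
    if (status < 0x80 || status >= 0xF0) return false;  // not a channel event
    const std::uint8_t type = status & 0xF0;
    // Program change (0xC0) and channel pressure (0xD0) carry one data byte.
    const std::size_t dataCount = (type == 0xC0 || type == 0xD0) ? 1 : 2;
    if (p + dataCount > bytes.size()) return false;
    out.status = status;
    out.data1 = bytes[p++];
    out.data2 = (dataCount == 2) ? bytes[p++] : 0;
    runningStatus = status;
    pos = p;
    return true;
}
```

With the bytes `0x90, 60, 100, 60, 0`, the first call yields a note on for note 60, and the second call reuses the `0x90` status and yields a note on with velocity 0, which `isNoteOff()` reports as the end of the note.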

For convenience, I assume that we want to display all the notes of the recording; all tracks are thus merged into one, and filtered to keep only the note and tempo events[3]. Then, I pair note on and note off events based on their note value and timestamps, to create a Note structure containing the note value, its start time and its duration in seconds, computed from the timestamps, the beat and the current tempo. Notes are stored in an array.

Display

All the elements displayed on screen use the same geometry: a single rectangle, instanced in various positions and scales for each element to show; this means only four vertices and six indices are uploaded to the GPU[4].
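As an illustration, the shared geometry could look like the sketch below. The vertex coordinates, the `Instance` fields and the `place` helper are assumptions made for this example; `place` only mirrors on the CPU what a vertex shader would do with per-instance parameters.

```cpp
#include <array>

// Unit rectangle centered on the origin: 4 vertices (x, y) and 6 indices
// forming two triangles. The exact coordinates are an assumption.
const std::array<float, 8> kQuadVertices = {
    -0.5f, -0.5f,  0.5f, -0.5f,  0.5f, 0.5f,  -0.5f, 0.5f};
const std::array<unsigned int, 6> kQuadIndices = {0, 1, 2, 0, 2, 3};

// Hypothetical per-instance parameters.
struct Instance {
    float centerX, centerY;
    float scaleX, scaleY;
};

// Position of vertex i of the shared quad once scaled and moved for a
// given instance.
std::array<float, 2> place(const Instance& inst, unsigned int i) {
    return {inst.centerX + inst.scaleX * kQuadVertices[2 * i],
            inst.centerY + inst.scaleY * kQuadVertices[2 * i + 1]};
}

// With OpenGL, all instances can then be drawn with a single call such as
// glDrawElementsInstanced(GL_TRIANGLES, 6, GL_UNSIGNED_INT, nullptr, count).
```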

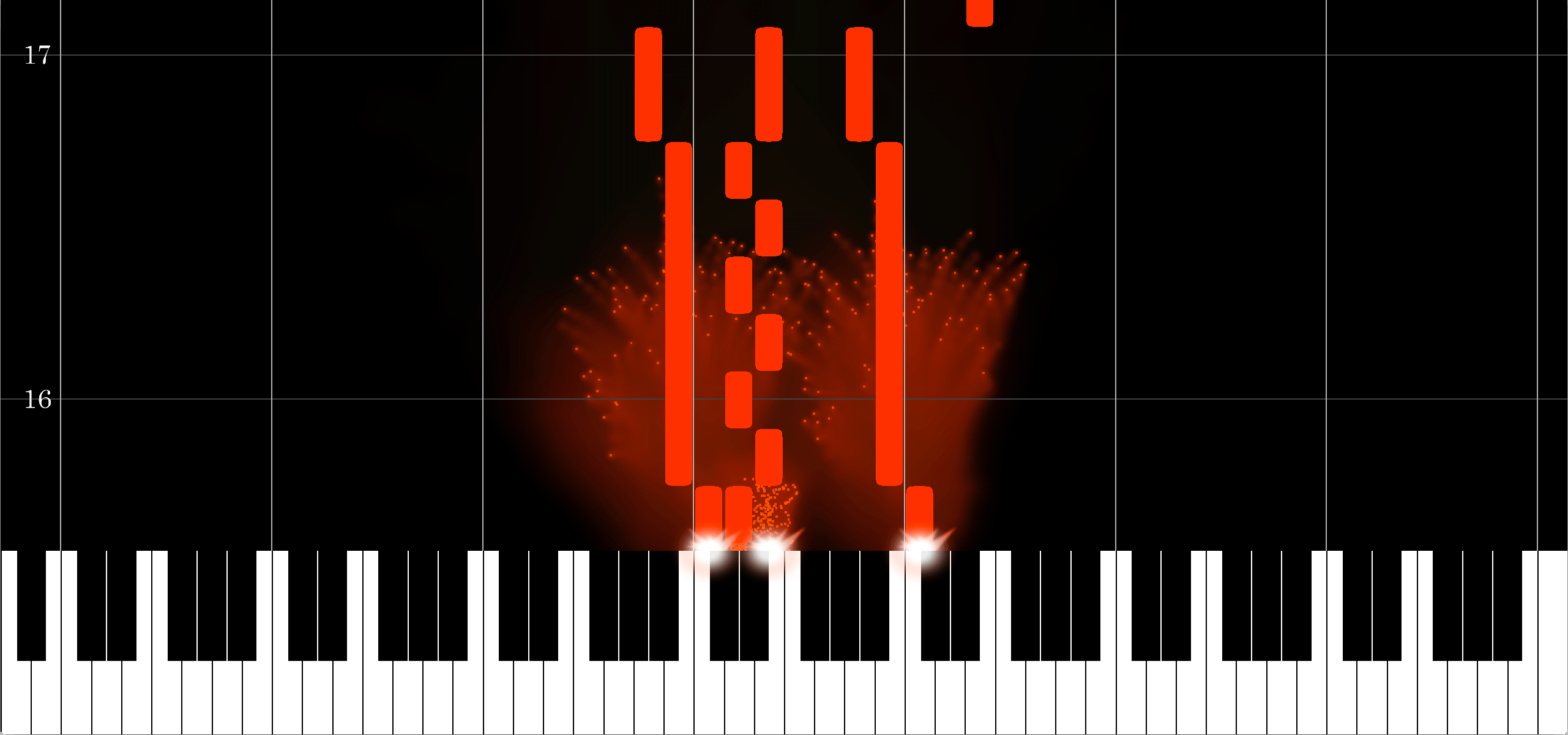

Notes

For each note of the MIDI score, a rectangle is instantiated on screen, aligned with the corresponding key, with a height proportional to the note duration. At any given time, notes that have already ended or that start too far in the future (and are in both cases off screen) are discarded early.
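A minimal sketch of the early discard and of the vertical placement, assuming a hypothetical `lookAhead` parameter (the number of seconds of music that fit between the keyboard and the top of the screen) and a coordinate convention chosen for this example:

```cpp
// True when a note overlaps the time window [now, now + lookAhead],
// i.e. when at least part of its rectangle is on screen.
bool isVisible(double start, double duration, double now, double lookAhead) {
    const double end = start + duration;
    return end >= now && start <= now + lookAhead;
}

// Vertical extent of a note, in units where 0 is the top edge of the
// keyboard and 1 is the top of the screen (an assumed convention).
struct Span {
    double bottom;
    double height;
};

Span verticalSpan(double start, double duration, double now, double lookAhead) {
    return {(start - now) / lookAhead, duration / lookAhead};
}
```

The height depends only on the duration, while the bottom edge decreases as `now` advances, which produces the scrolling.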

To soften their look, the corners of each rectangle are rounded by discarding the area outside of the super-ellipse defined by the following formula (where x and y are the local coordinates of the current fragment, w and h the size of the note, and r the corner radius):
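One super-ellipse that fits this description discards a fragment when |2x/w|^(w/r) + |2y/h|^(h/r) > 1. The exponents w/r and h/r are an assumption of this sketch and may differ from the exact expression used in the shader; x and y are taken relative to the center of the note. Written as plain C++ instead of shader code:

```cpp
#include <cmath>

// Returns true when the fragment at local coordinates (x, y), measured from
// the center of a w-by-h note, lies outside the rounded shape and should be
// discarded. The exponents w/r and h/r are an assumption of this sketch.
bool outsideRoundedNote(double x, double y, double w, double h, double r) {
    const double tx = std::pow(std::abs(2.0 * x / w), w / r);
    const double ty = std::pow(std::abs(2.0 * y / h), h / r);
    return tx + ty > 1.0;
}
```

With this form, a smaller radius gives larger exponents and a shape closer to the full rectangle, while the exact corners are always discarded.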

A user-defined base color is used for major keys (the white keys of the keyboard), and a slightly darker version is used for minor ones (the black keys). To make the notes stand out more against the other effects, they are given a thin, lighter outline.

Background

The background is made of a single rectangle covering the screen. All visual features are computed directly in the shader, based on the screen position of each pixel. The lower quarter of the screen represents the keyboard: a series of tests determines whether each pixel is black or white, depending on whether it belongs to a major key, a minor key, or a separating line. The octave and measure separations are overlaid as 2-pixel-wide lines. The measure count is displayed by first computing the current measure based on time, decomposing the number into its digits, and copying the bitmap representation of each digit from a small font atlas (shown below).
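The kind of tests involved can be sketched in plain C++ (the real version runs per pixel in a shader). The function names and the constant measure duration are assumptions made for this example.

```cpp
#include <vector>

// True for the five black keys of each octave (C#, D#, F#, G#, A#),
// using MIDI numbering where note % 12 == 0 is a C.
bool isBlackKey(int midiNote) {
    switch (midiNote % 12) {
    case 1: case 3: case 6: case 8: case 10:
        return true;
    default:
        return false;
    }
}

// Index of the measure being played at a given time, assuming a constant
// measure duration (hypothetical parameter).
int currentMeasure(double seconds, double secondsPerMeasure) {
    return static_cast<int>(seconds / secondsPerMeasure);
}

// Splits a non-negative number into decimal digits, most significant first.
// Each digit can then be used as an index into the font atlas.
std::vector<int> digits(int number) {
    std::vector<int> result;
    do {
        result.insert(result.begin(), number % 10);
        number /= 10;
    } while (number > 0);
    return result;
}
```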

Effects

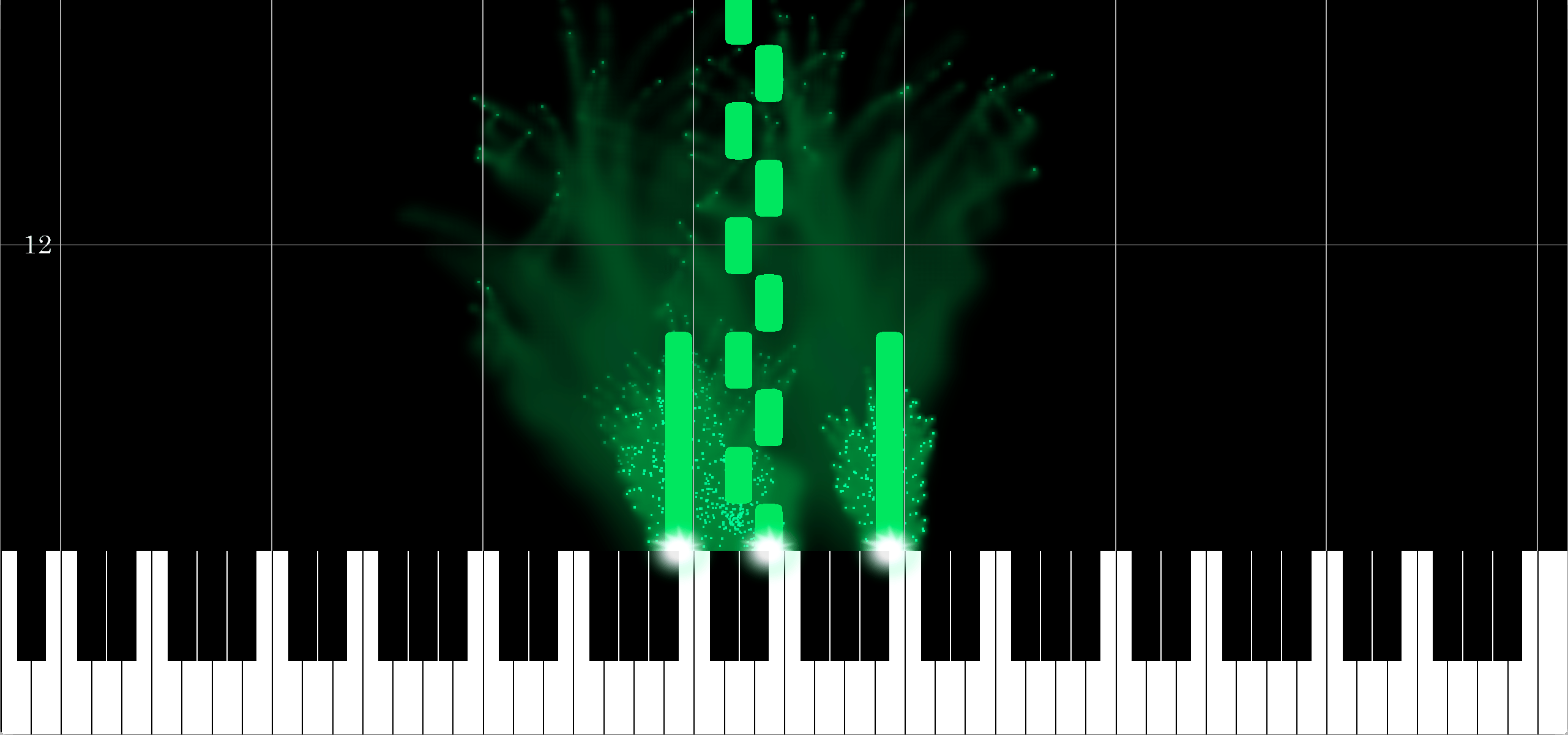

To improve the look of the visualization, I added effects similar to those shown in the initial video. This was an exercise in re-creation; I focused on three effects: animated flashes and bursts of particles when notes are played, and a fading residual trail linked to the particles.

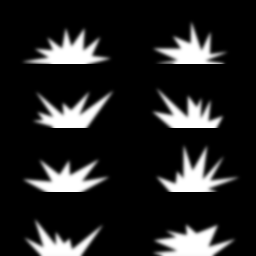

Flashes

While a note is playing, a flashing effect is displayed on the top edge of the keyboard, where the note's rectangle intersects it. Each key is associated with a rectangle covering this area on screen, and the flashes are activated in real time based on the currently active notes, through a synced data buffer updated each frame. The animation quickly cycles through a few flash sprites; a gradient is applied to obtain a halo effect around the spark.
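The per-frame update can be sketched as below. The buffer layout (one flag per MIDI note number), the `PlayedNote` structure and the fixed sprite rate are assumptions made for this example, not details taken from the project.

```cpp
#include <array>
#include <vector>

// Hypothetical note record for this sketch.
struct PlayedNote {
    int key;          // MIDI note number, 0..127
    double start;     // seconds
    double duration;  // seconds
};

// One flag per MIDI note number, rebuilt every frame before being sent to
// the GPU. A flag is 1 while the corresponding note is playing.
std::array<int, 128> activeKeys(const std::vector<PlayedNote>& notes, double now) {
    std::array<int, 128> active{};  // all zeros
    for (const PlayedNote& n : notes) {
        const bool playing = now >= n.start && now < n.start + n.duration;
        if (n.key >= 0 && n.key < 128 && playing) {
            active[n.key] = 1;
        }
    }
    return active;
}

// Index of the flash sprite to display, cycling through spriteCount sprites
// at a fixed rate.
int flashSprite(double now, double spritesPerSecond, int spriteCount) {
    return static_cast<int>(now * spritesPerSecond) % spriteCount;
}
```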

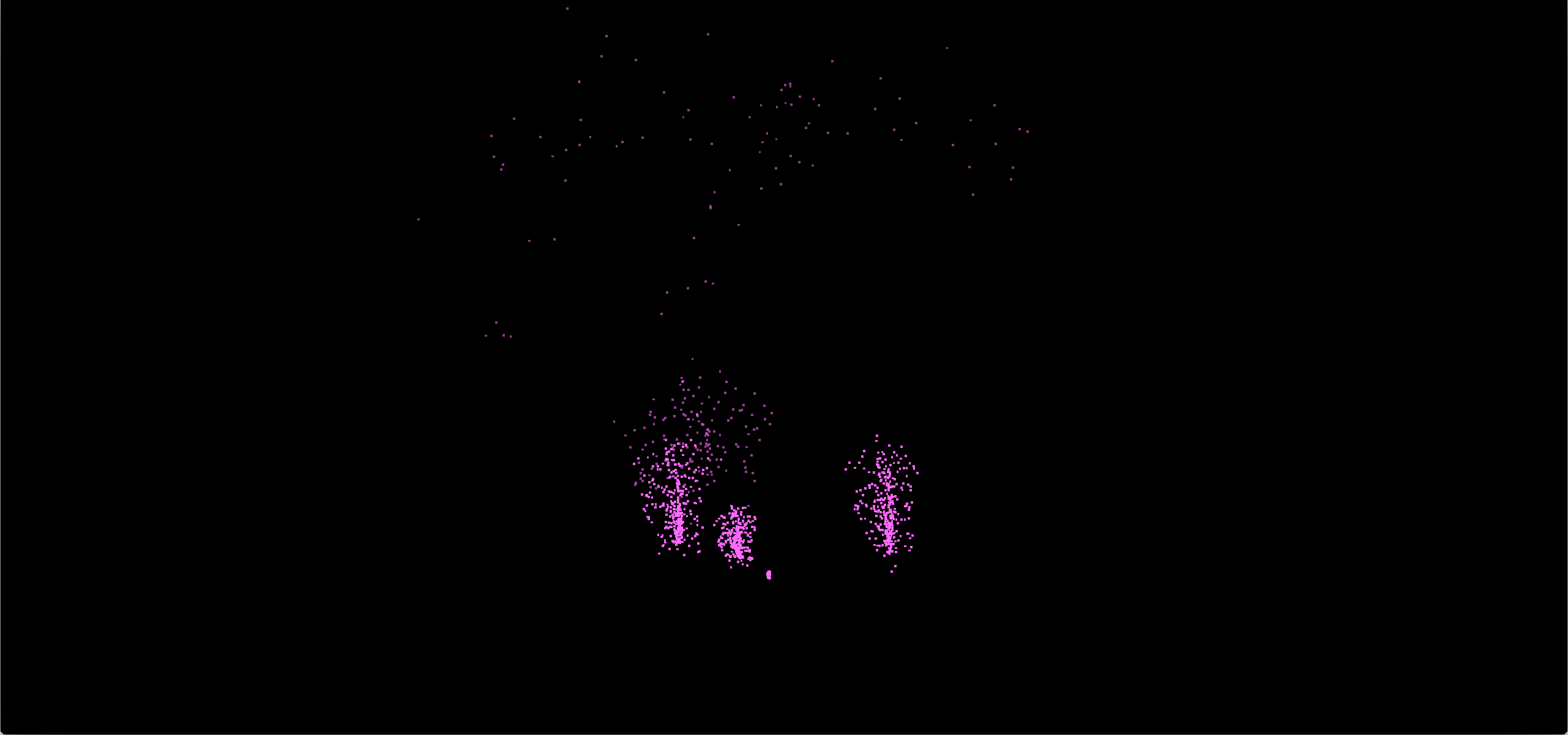

Particles

At the same time as a note flash is displayed, a stream of particles is emitted from the same location. One could choose to run a full real-time physics simulation[5] to animate each particle system. I used a simpler trick: draw many trajectories by hand on separate layers in Photoshop, and extract positions at regular intervals along each trajectory. We assume that all particles move in the [-1,1]x[0,1] rectangle. Using a MATLAB script, each trajectory is followed, and the successive 2D positions are converted to colors: the red and green channels hold the x and y coordinates, and the blue channel denotes that a trajectory is finished. The positions for each trajectory are stacked up; we obtain a texture where each line corresponds to the position of a particle over time.

Each rendered particle reads linearly along a given line of the texture, assigned at random, and converts the color back to a 2D position. Towards the end of the particle's life, a fade-out is applied to make it disappear.
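The encoding and decoding can be sketched on the CPU as follows. The 8-bit channels, the threshold on the blue channel and the linear interpolation between neighboring texels are assumptions made for this example; on the GPU, the texture sampler would take care of the interpolation.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Texel { std::uint8_t r, g, b; };
struct Position { float x, y; bool finished; };

// Encodes a position in [-1,1] x [0,1] as a color, as an offline script
// could do: x is remapped to [0,1] before being stored in the red channel.
Texel encode(float x, float y, bool finished) {
    const auto toByte = [](float v) {
        const float clamped = std::min(std::max(v, 0.0f), 1.0f);
        return static_cast<std::uint8_t>(std::lround(clamped * 255.0f));
    };
    return {toByte(0.5f * x + 0.5f), toByte(y),
            static_cast<std::uint8_t>(finished ? 255 : 0)};
}

// Converts a color back to a position.
Position decode(const Texel& t) {
    return {2.0f * (t.r / 255.0f) - 1.0f, t.g / 255.0f, t.b > 127};
}

// Reads one texture row (one trajectory) at a normalized age in [0,1],
// interpolating between the two nearest texels. The row must not be empty.
Position sample(const std::vector<Texel>& row, float age) {
    const float t = std::min(std::max(age, 0.0f), 1.0f) * float(row.size() - 1);
    const std::size_t i0 = static_cast<std::size_t>(t);
    const std::size_t i1 = std::min(i0 + 1, row.size() - 1);
    const float f = t - float(i0);
    const Position a = decode(row[i0]);
    const Position b = decode(row[i1]);
    return {a.x + f * (b.x - a.x), a.y + f * (b.y - a.y), a.finished};
}
```

Storing positions in 8-bit channels limits the precision to 256 steps per axis, which is one reason to keep all trajectories inside a small normalized rectangle.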

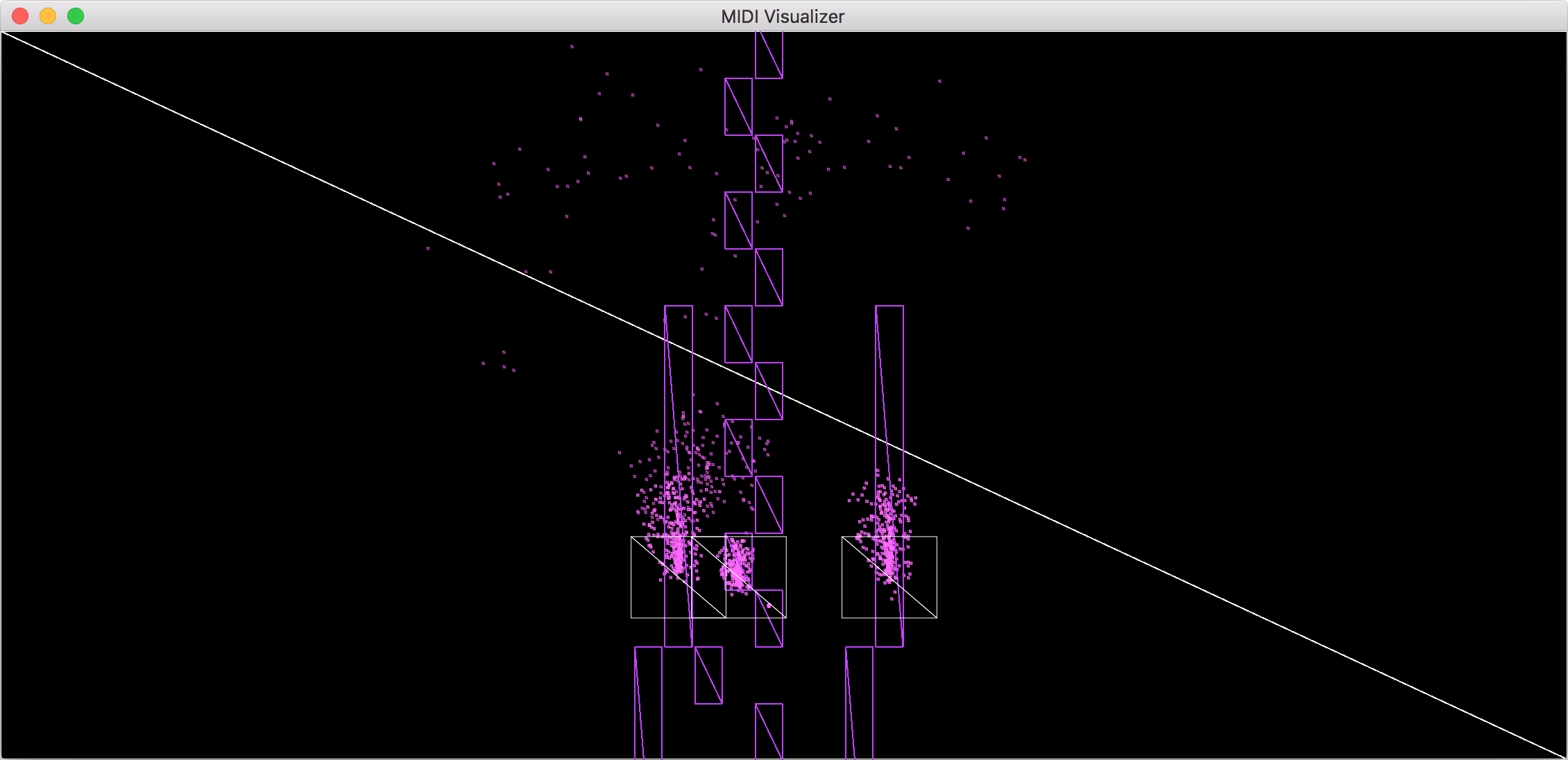

Fading trail effect

Finally, the third effect is designed to enhance the particles' visibility and movements. Each particle leaves behind a colored trail that slowly fades. To achieve this, the particles are drawn to a separate framebuffer[6], over the blurry trails obtained from the previous frame. The result is blurred using a wide-radius box blur[7], and slightly faded out. It is then used as a background for the main scene, and as an input to the next frame's trail rendering step, as shown below. This way, particles from previous frames are accumulated, giving slowly fading and spreading splats of color.

Conclusion

This project was an interesting way to try a few new things: particles animated from positions stored in a texture, fade-out using accumulation in a separate framebuffer, and obviously MIDI parsing! The finalized executable can load any MIDI file and display it using a user-defined base color. There is no music playing at the same time, as this would be a whole other adventure...

Update: the source is now available on GitHub.

[1] Multiple file sub-formats, for instance: one track, multiple tracks, raw recorded stream.

[2] A fact that my initial documentation did not specify...

[3] As these impact the duration of the following notes.

[4] OpenGL instanced drawing gives us the ability to display multiple copies of this rectangle with per-instance parameters.

[5] Either on the CPU or the GPU.

[6] A virtual screen that can be drawn onto, and used as a texture later.

[7] Faster than a Gaussian blur of the same radius.