Coming back to Uru, part 2

Last week, I decided to implement a 3D viewer for Uru, a game in the Myst series. The goal is to load and visualize assets from the game[1]: images, objects, and so on. I have succeeded in loading meshes and textures from the .prp asset files into RAM and then onto the graphics processor.

Meshes are composed of vertices and triangles. They are stored in one huge list for each scene, with descriptors pointing to where each object is in this list. More specifically, any object in the game level can be composed of multiple sub-elements, each pointing to a different region of this list. In it, each vertex is associated with a position, a normal, a color and multiple texture coordinates. This format is similar to what the GPU expects, so the translation was easy. The most difficult part was handling the fact that some models have vertex coordinates already placed in the final scene, while others have to be transformed at runtime, and still others have constraints applied (for instance so that they always face the player, for special effects). Below is a rendering of the reference scene, as a wireframe mesh and with the surface normal components displayed as colors.
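To make the layout above more concrete, here is a minimal sketch of a shared per-scene vertex list with descriptors pointing into it. The names (`Vertex`, `Span`, `extract_sub_element`) are hypothetical and are not taken from the .prp format, and the sketch assumes that triangle indices are absolute positions in the shared vertex list; the real files may well differ.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Vertex:
    position: Vec3
    normal: Vec3
    color: Tuple[int, int, int, int]   # RGBA, 0-255
    uvs: List[Tuple[float, float]]     # one entry per texture-coordinate set


@dataclass
class Span:
    """Hypothetical descriptor: the slice of the shared lists used by one sub-element."""
    vertex_start: int
    vertex_count: int
    index_start: int
    index_count: int


def extract_sub_element(vertices, indices, span):
    """Return (vertices, triangles) for one span, with indices rebased to start at 0.

    Assumes `indices` holds absolute positions in the shared vertex list.
    """
    verts = vertices[span.vertex_start:span.vertex_start + span.vertex_count]
    raw = indices[span.index_start:span.index_start + span.index_count]
    local = [i - span.vertex_start for i in raw]
    # Group the flat index list into triangles, three indices at a time.
    triangles = [tuple(local[i:i + 3]) for i in range(0, len(local), 3)]
    return verts, triangles
```

On the GPU side, rebasing is usually unnecessary: the whole list can be uploaded once and each sub-element drawn with its own offset and count.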

Meanwhile, textures are stored in a special compressed format pre-optimized for storage in GPU memory. The DXT format was mainly designed for DirectX, but OpenGL also supports it through a very common extension; loading it is as simple as loading a standard texture with an additional flag.
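One detail that matters when uploading DXT data is the byte size of each image, since OpenGL's `glCompressedTexImage2D` takes it as its `imageSize` argument. DXT stores pixels in 4x4 blocks, using 8 bytes per block for DXT1 and 16 bytes for DXT3 and DXT5. The sketch below computes those sizes; the helper names are hypothetical, and whether a given texture comes with a full mip chain is an assumption here.

```python
# Bytes per 4x4 block for each DXT variant.
BLOCK_BYTES = {"DXT1": 8, "DXT3": 16, "DXT5": 16}


def dxt_level_size(width, height, fmt):
    """Byte size of one compressed image of the given dimensions."""
    blocks_x = max(1, (width + 3) // 4)   # round up to whole blocks
    blocks_y = max(1, (height + 3) // 4)
    return blocks_x * blocks_y * BLOCK_BYTES[fmt]


def dxt_mip_chain(width, height, fmt):
    """List (level, width, height, byte_size) for every mip level down to 1x1."""
    levels = []
    level = 0
    while True:
        levels.append((level, width, height, dxt_level_size(width, height, fmt)))
        if width == 1 and height == 1:
            break
        width, height = max(1, width // 2), max(1, height // 2)
        level += 1
    return levels
```

For example, `dxt_level_size(256, 256, "DXT1")` gives 32768 bytes, against 262144 bytes for the same image stored as uncompressed RGBA.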

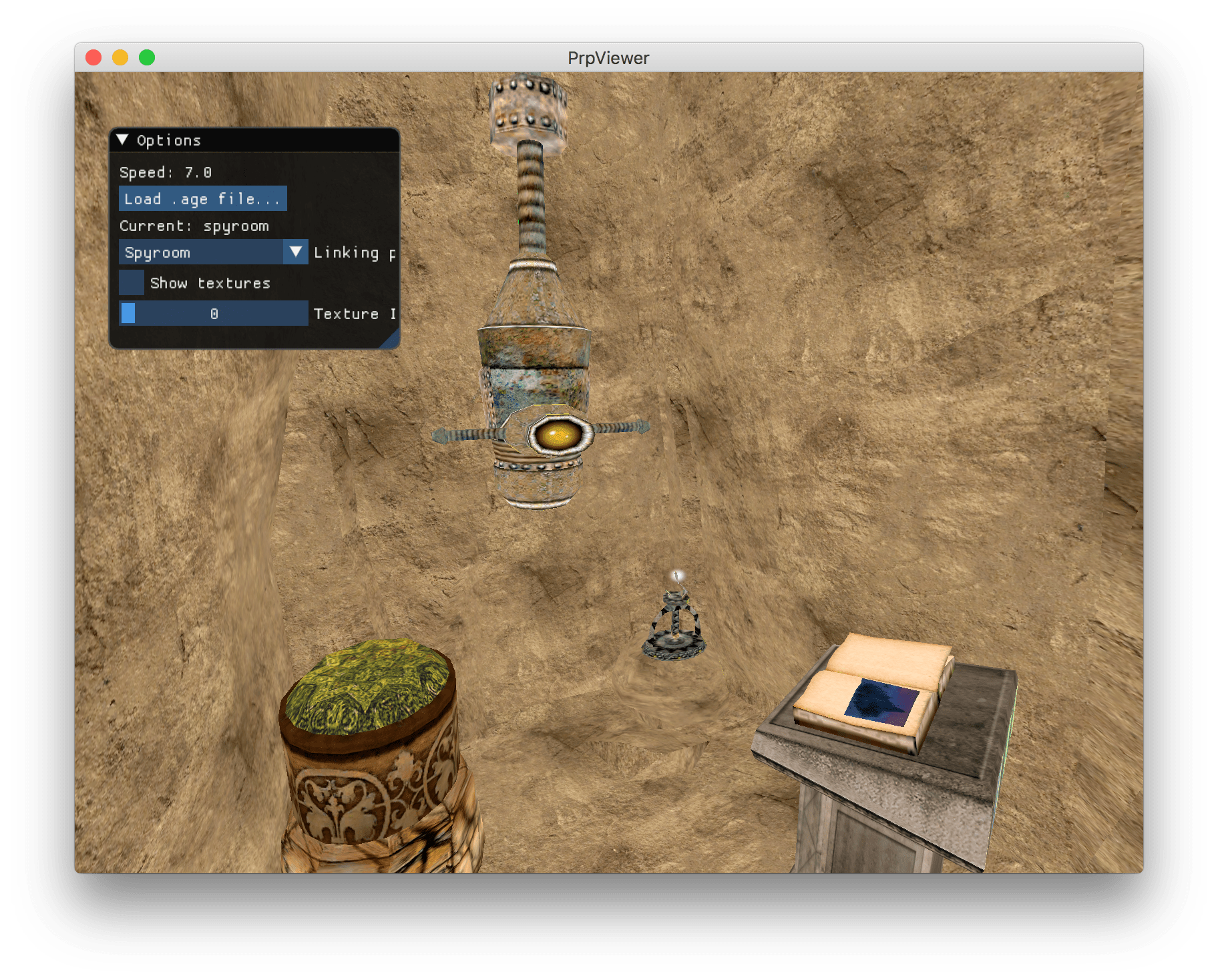

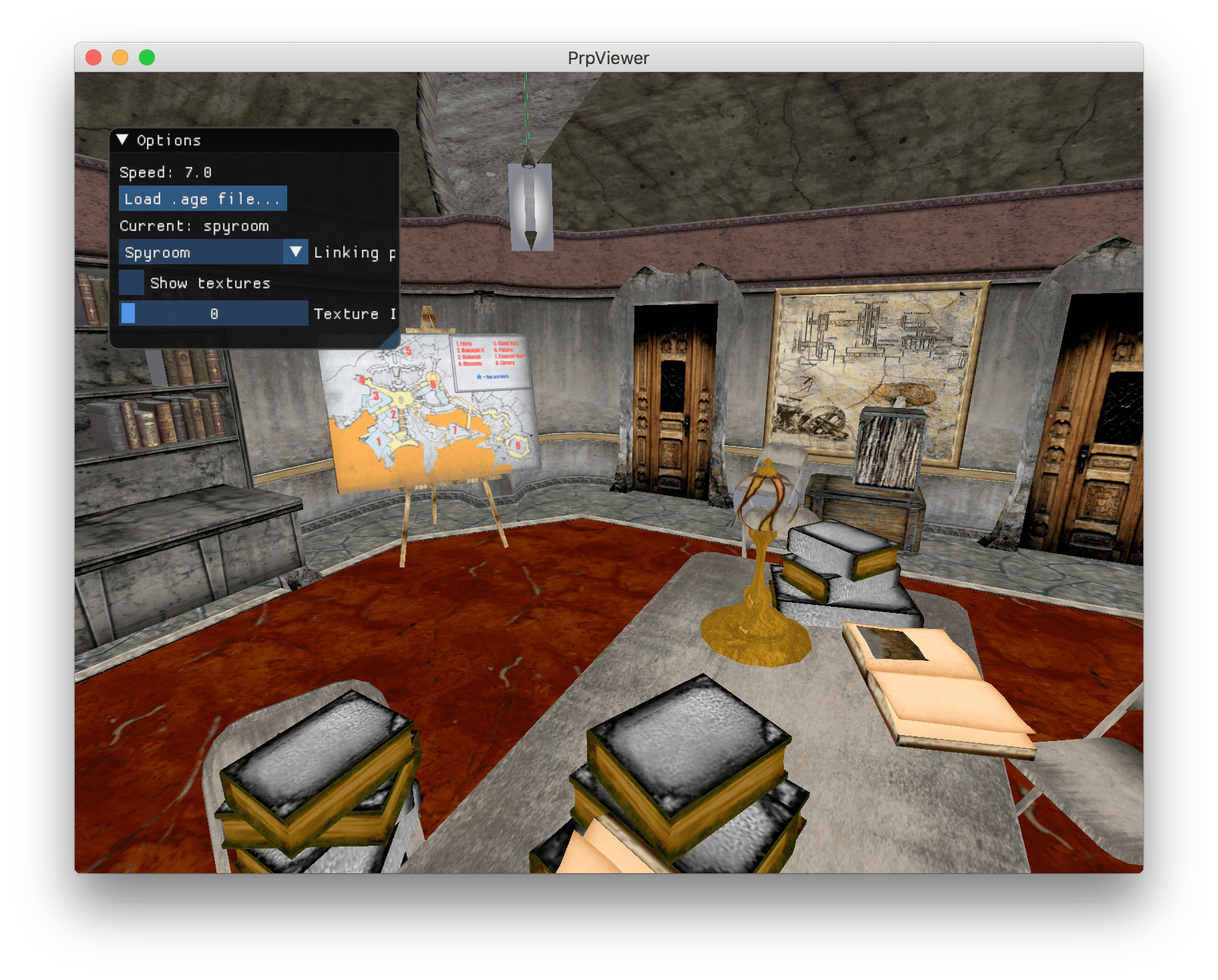

Once all the data has been sent to the GPU, we can start combining it to render textured meshes. In practice each sub-element can have a complex material composed of multiple texture and effect layers, blended in multiple ways. For now, I simply use the base layer of each element as a texture.

The result is a good start but remains incomplete because of the lack of shading and multi-layered materials. In the original engine, these were rendered in multiple passes using the DirectX fixed-function pipeline. This is a legacy method where lighting computations and texture operations are predefined, and the equivalent in OpenGL cannot be used anymore[2]. The next step will thus be to find a way to emulate this approach with shaders, the custom code used for rendering in the current graphics pipeline. Also, some of the worlds can contain thousands of objects, and rendering them is already hurting performance; I will need to implement culling so that the GPU is only asked to render objects that are visible on screen at a given moment.
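As an illustration of what such culling could look like, here is a minimal sketch of one common approach: testing a bounding sphere for each object against the planes of the view frustum. This is not necessarily the method the viewer will end up using. The `Plane`, `Sphere` and `cull` names are hypothetical, and the planes are assumed to have unit-length normals pointing towards the inside of the frustum.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Plane:
    normal: Vec3   # unit length, pointing towards the inside of the frustum
    d: float       # a point p is inside when dot(normal, p) + d >= 0


@dataclass
class Sphere:
    center: Vec3
    radius: float


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sphere_visible(sphere, planes):
    """True unless the sphere lies entirely behind at least one plane.

    The test is conservative: a sphere near a frustum corner can be
    reported visible even though it is actually outside.
    """
    return all(dot(p.normal, sphere.center) + p.d >= -sphere.radius for p in planes)


def cull(objects, planes):
    """Keep the names of the (name, bounding sphere) pairs that may be visible."""
    return [name for name, sphere in objects if sphere_visible(sphere, planes)]
```

With thousands of objects, a per-object test like this costs a handful of dot products each, which is typically much cheaper than submitting the draw calls it avoids.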

Finally, just a quick shoutout to the ImGui library, which I have been using for the debug interface. It is easy to use and to integrate into your codebase, while being efficient and customizable.